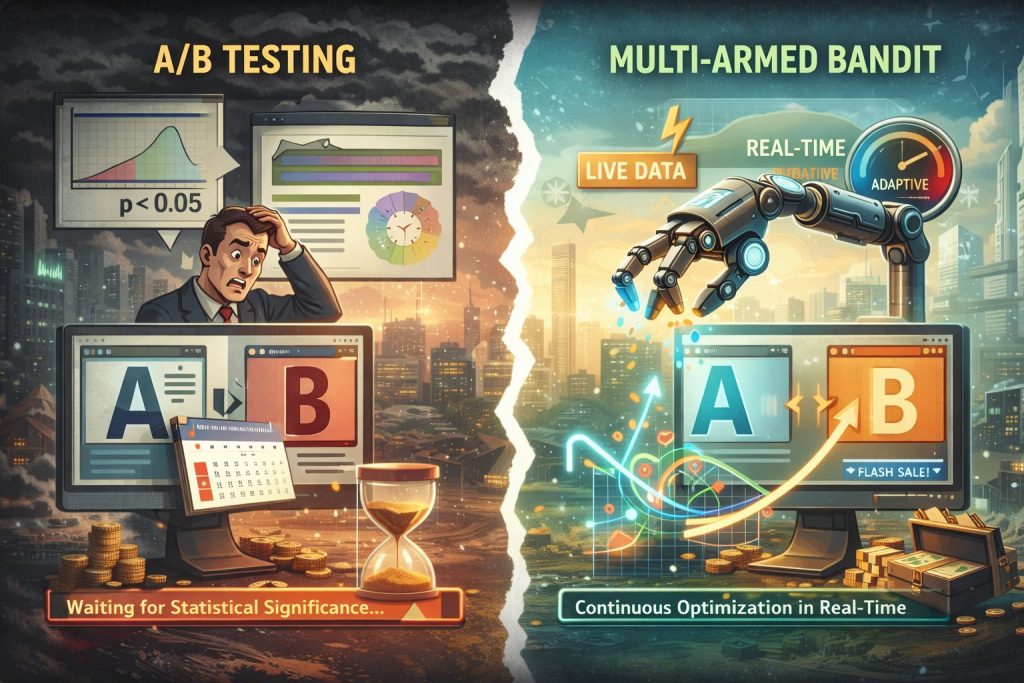

In the relentless pursuit of online optimization, businesses have long relied on A/B testing as the undisputed king of conversion rate optimization. For decades, the ritual was clear: split your audience, pit two versions against each other, patiently wait for statistical significance, and declare a winner.

But what if I told you this time-honored tradition is becoming a relic? What if the very principles that made A/B testing robust are now its greatest weakness in the age of dynamic, personalized, and real-time digital experiences?

The truth is, A/B testing isn’t just “showing its age”—it’s actively costing you money. Its static, patient approach is increasingly out of step with the velocity of modern business, leaving significant revenue on the table.

Enter the Multi-Armed Bandit (MAB), a sophisticated optimization strategy that’s not merely an upgrade, but a paradigm shift. If A/B testing is a meticulous but slow scientific experiment, MAB is an agile, always-on learning machine, constantly adapting and maximizing your gains.

The Opportunity Cost: The Silent Killer of A/B Testing

Imagine you’re running a crucial holiday sale with a limited window. You launch an A/B test for a new landing page headline, hoping to boost conversions. For two weeks, you dutifully split your traffic 50/50 between “Headline A” and “Headline B.”

During this period, unbeknownst to you, “Headline B” is performing 20% better than “Headline A.” Yet, because you’re committed to your 50/50 split until “statistical significance” is reached, you’re intentionally showing an inferior version to half of your valuable customers.

This isn’t just bad luck; it’s a fundamental flaw in the A/B testing model, known as opportunity cost. The bandit literature has its own name for it: regret.

A/B testing is designed for “exploration then exploitation”—you explore both options equally, then you exploit the winner. This means a significant portion of your traffic is sacrificed for the sake of certainty. In a rapidly evolving digital landscape, certainty often comes at too high a price.
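To put rough numbers on that sacrifice, here is a minimal sketch of the holiday-sale scenario. The visitor count and base conversion rate are made up for illustration; only the 20% relative lift comes from the example above.

```python
def expected_conversions(visitors, rate_a, rate_b, share_b):
    """Expected conversions when a fraction `share_b` of visitors sees variant B."""
    return visitors * ((1 - share_b) * rate_a + share_b * rate_b)


# Hypothetical numbers, chosen only for illustration.
visitors = 100_000        # total visitors over the two-week test
rate_a = 0.050            # assumed conversion rate of Headline A
rate_b = rate_a * 1.20    # Headline B converts 20% better (relative lift)

even_split = expected_conversions(visitors, rate_a, rate_b, share_b=0.5)
all_b = expected_conversions(visitors, rate_a, rate_b, share_b=1.0)
opportunity_cost = all_b - even_split

print(f"50/50 split:      {even_split:.0f} expected conversions")
print(f"All traffic to B: {all_b:.0f} expected conversions")
print(f"Opportunity cost: {opportunity_cost:.0f} conversions")
```

Under these assumptions the fixed 50/50 split gives up roughly 500 conversions compared with sending everyone to Headline B. No method can capture all of that, since you have to learn which headline is better first, but it is the gap a bandit tries to shrink.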

The Multi-Armed Bandit, by contrast, operates on an “explore and exploit” principle. It continuously monitors performance and dynamically allocates more traffic to the better-performing variant as the experiment progresses.

Think of it like a seasoned gambler at a row of slot machines (the “one-armed bandits”). If one machine starts paying out more frequently, a smart gambler doesn’t keep pulling the lever on the losing machines equally. They shift their focus to the winner. MAB does exactly that, but algorithmically and at scale.
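One common way to implement that "shift toward the winner" behavior is Thompson sampling. The sketch below is a minimal illustration, not a production implementation: it assumes binary outcomes (converted or not), and the class name, conversion rates, and visitor count are all hypothetical.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over a fixed set of variants (arms)."""

    def __init__(self, n_arms, seed=None):
        self.successes = [0] * n_arms
        self.failures = [0] * n_arms
        self.rng = random.Random(seed)

    def choose(self):
        # Draw a plausible conversion rate for each arm from its Beta posterior
        # (uniform Beta(1, 1) prior), then serve the arm with the highest draw.
        draws = [
            self.rng.betavariate(s + 1, f + 1)
            for s, f in zip(self.successes, self.failures)
        ]
        return max(range(len(draws)), key=draws.__getitem__)

    def update(self, arm, converted):
        if converted:
            self.successes[arm] += 1
        else:
            self.failures[arm] += 1


def simulate(true_rates, visitors, seed=0):
    """Return how many visitors each arm was shown in a simulated campaign."""
    bandit = ThompsonSampler(len(true_rates), seed=seed)
    env = random.Random(seed + 1)  # separate stream for simulated visitors
    pulls = [0] * len(true_rates)
    for _ in range(visitors):
        arm = bandit.choose()
        pulls[arm] += 1
        bandit.update(arm, env.random() < true_rates[arm])
    return pulls


# Hypothetical rates: Headline A converts at 5%, Headline B at 6%.
pulls = simulate([0.05, 0.06], visitors=20_000)
print("Visitors shown A / B:", pulls)
```

Early on, both posteriors are wide, so the two arms are served about equally. As evidence accumulates, the better arm wins the random draw more often and receives a growing share of traffic, which is the gambler's behavior expressed as an algorithm.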

When “Statistical Significance” Becomes a Trap

The bedrock of A/B testing is achieving “statistical significance,” typically judged by comparing a p-value against a preset threshold such as 0.05. This limits the chance that an observed difference is just random noise, though it never rules it out. While vital for academic rigor, this pursuit of certainty often translates to:

- Fixed Horizons: A/B tests require a predetermined duration or sample size. You can’t just stop early when a winner appears obvious, because repeatedly checking the results and stopping at the first significant reading inflates the false-positive rate.

- Slow Decisions: Waiting for weeks to declare a winner means that by the time you implement your “optimized” version, market conditions might have changed. A new competitor, a holiday season, or even just a news cycle can render your carefully acquired data obsolete.
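The fixed-horizon constraint is easy to see in a simulation. The sketch below runs A/A tests, where both variants share the same true conversion rate, so every "significant" result is a false positive. It compares checking once at the end with peeking after every batch of visitors. The batch size, rate, and number of runs are arbitrary assumptions, and the two-proportion z-test is just one common choice of significance test.

```python
import math
import random


def z_score(conv_a, n_a, conv_b, n_b):
    """Two-proportion z statistic using a pooled standard error."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    return (conv_b / n_b - conv_a / n_a) / se


def false_positive_rates(runs=400, peeks=10, batch=200, rate=0.10, seed=0):
    """Return (rate when stopping at any significant peek, rate at final look only)."""
    rng = random.Random(seed)
    any_peek = final_only = 0
    for _run in range(runs):
        conv_a = conv_b = n = 0
        hit_at_some_peek = False
        z = 0.0
        for _peek in range(peeks):
            # Both arms have the SAME true rate, so there is no real winner.
            conv_a += sum(rng.random() < rate for _i in range(batch))
            conv_b += sum(rng.random() < rate for _i in range(batch))
            n += batch
            z = z_score(conv_a, n, conv_b, n)
            if abs(z) > 1.96:  # two-sided test at the 5% level
                hit_at_some_peek = True
        any_peek += hit_at_some_peek
        final_only += abs(z) > 1.96
    return any_peek / runs, final_only / runs


peeking_rate, fixed_rate = false_positive_rates()
print(f"False positives, single final look:  {fixed_rate:.1%}")
print(f"False positives, stop at any peek:   {peeking_rate:.1%}")
```

The single final look should land near the nominal 5%, while the peeking strategy declares a bogus winner noticeably more often. That gap is why a classic A/B test has to commit to its horizon up front.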

MAB, conversely, is an always-on, continuous optimization engine. It doesn’t wait for a “final answer.” It learns, adapts, and directs traffic to the best performer in real-time. This makes it incredibly powerful for:

- Short-Term Campaigns: For flash sales, daily promotions, or trending content, MAB can identify the winning call-to-action or image in hours, maximizing revenue within the limited window.

- High-Volume, Rapid Iteration: Websites with constantly changing content, like news portals, can use MAB to optimize headlines or featured articles for engagement on the fly.

Beyond the Winner: The Power of Contextual Bandits

Traditional A/B testing aims to find the global winner – the single best version for everyone. But in an era of hyper-personalization, this “one size fits all” approach is increasingly inadequate.

This is where Contextual Multi-Armed Bandits truly shine. A Contextual Bandit doesn’t just find a winner; it finds the winner for a specific user in a specific context.

Imagine you’re optimizing a product recommendation widget with three candidate designs. An A/B/n test might tell you that “Widget A” performs best overall. But what if:

- “Widget A” works best for users who arrived from social media?

- “Widget B” converts better for returning customers who arrived via email?

- “Widget C” is superior for first-time visitors using a mobile device?

A traditional A/B test would average these results and pick the overall best, missing these crucial nuances entirely. A Contextual Bandit, however, learns these relationships dynamically.

Instead of optimizing for an average user, it optimizes for the context each user arrives in, serving the variant most likely to convert visitors like them. Given enough traffic in each context, this can deliver genuinely personalized experiences and higher overall conversion rates.
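The simplest possible contextual bandit just keeps a separate set of statistics per context. The sketch below does that with Thompson sampling over three hypothetical segments that mirror the widget example; the segment labels and conversion rates are invented for illustration. Real systems typically replace this lookup table with a model that generalizes across many context features (LinUCB is one well-known approach), so treat this as a toy under stated assumptions.

```python
import random
from collections import defaultdict


class ContextualThompson:
    """Tabular contextual bandit: independent Beta posteriors per (context, arm)."""

    def __init__(self, arms, seed=None):
        self.arms = list(arms)
        self.rng = random.Random(seed)
        # stats[context][arm] = [successes, failures]
        self.stats = defaultdict(lambda: {arm: [0, 0] for arm in self.arms})

    def choose(self, context):
        arm_stats = self.stats[context]
        # Sample a plausible rate per arm for THIS context; serve the best draw.
        return max(
            self.arms,
            key=lambda arm: self.rng.betavariate(
                arm_stats[arm][0] + 1, arm_stats[arm][1] + 1
            ),
        )

    def update(self, context, arm, converted):
        self.stats[context][arm][0 if converted else 1] += 1


def most_served(true_rates, visits_per_context=3000, seed=0):
    """Simulate traffic and return the most-served arm for each context."""
    arms = sorted(next(iter(true_rates.values())))
    bandit = ContextualThompson(arms, seed=seed)
    env = random.Random(seed + 1)  # separate stream for simulated visitors
    served = {ctx: {arm: 0 for arm in arms} for ctx in true_rates}
    for _ in range(visits_per_context):
        for ctx, rates in true_rates.items():
            arm = bandit.choose(ctx)
            served[ctx][arm] += 1
            bandit.update(ctx, arm, env.random() < rates[arm])
    return {ctx: max(counts, key=counts.get) for ctx, counts in served.items()}


# Hypothetical conversion rates: each segment has a different best widget.
TRUE_RATES = {
    "social": {"A": 0.20, "B": 0.05, "C": 0.05},
    "email_returning": {"A": 0.05, "B": 0.20, "C": 0.05},
    "mobile_first_time": {"A": 0.05, "B": 0.05, "C": 0.20},
}

winners = most_served(TRUE_RATES)
print(winners)
```

In this toy setup the three widgets are equally good on average across segments, so a single global test would have no clear winner to find, while the per-context bandit settles on a different widget for each segment.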

When A/B Testing Isn’t (Completely) Dead

To be intellectually honest, A/B testing still has its place, particularly for specific use cases:

- Major Structural Changes: If you’re completely redesigning your checkout flow or a core navigation element, an A/B test can provide clean, defensible data to understand the overall impact and rule out unintended negative consequences.

- Scientific Inquiry & Understanding “Why”: If your goal is to understand the causal relationship between a change and a user behavior for academic research or deep insights, A/B testing’s controlled environment can be superior.

- Highly Regulated Industries: In sectors where every change must be rigorously documented and justified, the clear, fixed-allocation comparison of an A/B test against a control might be preferred.

However, for the vast majority of ongoing optimization – headlines, ad copy, button colors, product recommendations, content personalization, and real-time messaging – the Multi-Armed Bandit is the superior choice.

The Shift is Now: Embrace the Future of Optimization

The digital world is not static; your optimization strategy shouldn’t be either. Relying solely on A/B testing in 2026 is like bringing a horse and buggy to a Formula 1 race. It gets you there, eventually, but at an unacceptably slow pace and with significant missed opportunities.

The Multi-Armed Bandit offers:

- Faster Results: Shift traffic toward the better variant while the experiment is still running, minimizing opportunity cost.

- Continuous Learning: Always adapting to changing user behavior and market conditions.

- Personalized Experiences: Delivering the right content to the right user at the right time.

Stop leaving money on the table. It’s time to retire the slow, static A/B test for day-to-day optimization and empower your business with the dynamic, intelligent power of the Multi-Armed Bandit. The future of conversion rate optimization isn’t about finding a winner; it’s about continuously being the winner.